OpenAI Says It Won’t Steal Data From Those Who Use Its New API to Train ChatGPT

OpenAI, the maker of DALL-E 2 and ChatGPT, has practically become the tech world’s go-to for implementing AI. On Wednesday the company announced it’s making it easier for developers to jam large language models down the pipe of every app and site that wants them. But more than that, it wants any company that ends up using its new paid-for system to know that it won’t use that company’s data to train its AI model. After all, that’s what it has the rest of us for.

The company announced it was releasing its ChatGPT API, with a model called gpt-3.5-turbo. This new model is vastly cheaper than the company’s previous API for its GPT-3 LLM, and developers get access to updates as they roll out. A new “stable” version of ChatGPT should be released in April, according to the company.

This move was already clear to those reading up on Snapchat’s recent implementation of its “My AI” system into its app. Leaked product briefs of OpenAI’s “Foundry” showed that Turbo costs around $78,000 for a three-month commitment, or $264,000 for a one-year subscription. It’s nearly 10 times less expensive than the company’s previous davinci model. OpenAI is also making the second iteration of its Whisper AI available in an API, which will allow devs to access the company’s speech-to-text transcription and translation AI.

But what this means for all those services that have tried to unofficially incorporate ChatGPT is that they can now officially get in on the AI action and then charge users for the privilege. Effectively, every single company out there with a few thousand on hand can open itself up to all the uses of AI, as well as the strangeness and outright misinformation it can provide.

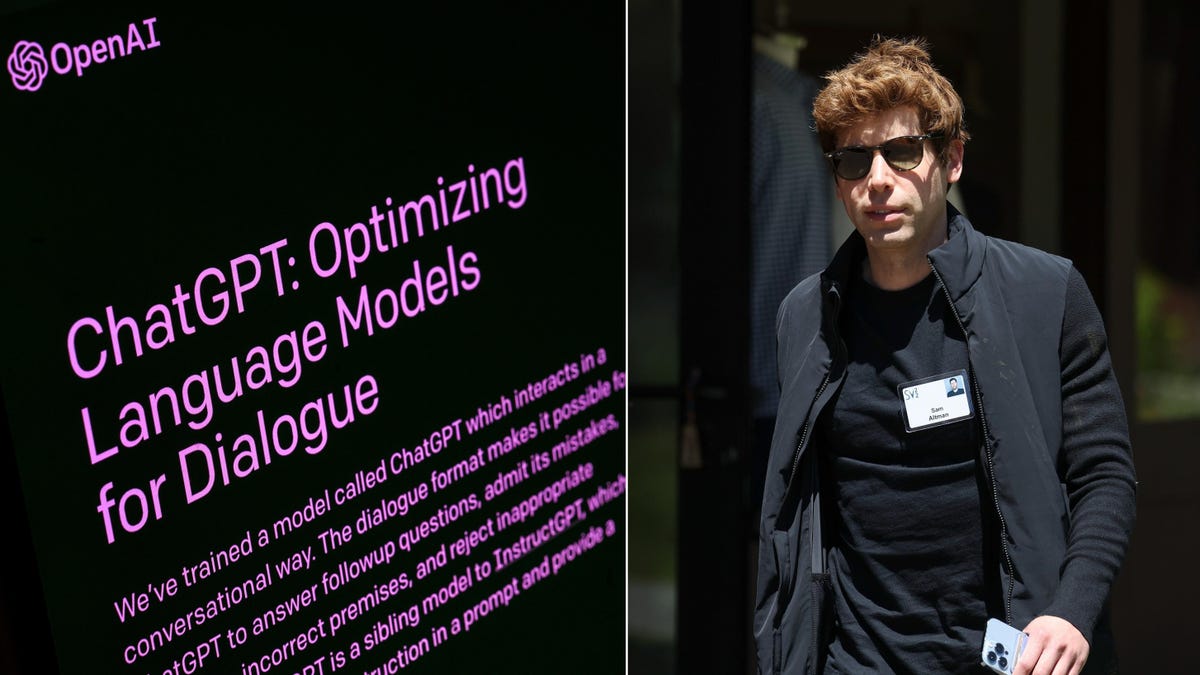

And those who pay don’t just get access to ChatGPT to implement it into all sorts of programs; they also receive a commitment that data submitted through the API will “no longer” be used to train the large language model, unless of course they opt in. CEO Sam Altman reiterated this point in a tweet Wednesday.

Any data OpenAI received prior to March 1 “may have been used for improvement if the customer had not previously opted out of sharing data,” according to the terms of service page. The company retains API data for 30 days to identify any “abuse and misuse,” and both OpenAI and contractors have access to that data during that time. Regular users of ChatGPT can continue to expect that OpenAI will use their data to train its AI.

“We believe that AI can provide incredible opportunities and economic empowerment to everyone, and the best way to achieve that is to allow everyone to build with it,” the company wrote in its announcement post. In addition, the company promised that it will work to cut down on outages so its users can keep feeding ChatGPT all their prompts, queries, and ruminations typed into the chatbot.

CEO Sam Altman wants to become the king of AI, despite ChatGPT not being anywhere close to realized

ChatGPT is already extremely popular, having reached 100 million monthly active users by January. Internet analytics company Similarweb reported on Tuesday that ChatGPT is even more popular than Bing with its own AI, even though Microsoft’s service incorporates OpenAI’s technology. Of course, Bing’s new AI is still limited to select users through an ever-expanding “preview,” but traffic to ChatGPT’s web domain was up 83% in the first 25 days of February compared to January. Bing would be lucky to get the same uptick in traffic in so little time, especially as it has recently rolled back its AI’s capabilities.

With this move, OpenAI is now positioning itself as the major corporate force for all AI development. Of course, the company still enjoys the public perception that it’s just a small startup struggling in the trenches to make amazing breakthroughs in technology. That simply is no longer the case. ChatGPT was released in November of last year by the skin of its teeth, as developers later admitted. Barely three months ago, OpenAI CEO Altman was tweeting “ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness.”

Things simply don’t change that fast in just three months, not with technology of this size and complexity. Altman tweeted yesterday that “ChatGPT has an ambitious roadmap and is bottlenecked by engineering.” This may be alarming to the folks who are still waiting for the company to truly upgrade its machine learning system so it’s much less likely to spew falsehoods. But it doesn’t matter, because any company big enough to afford it can now officially access this tech.

Altman has other things on his mind, including his broad and incredibly vague goals for real “artificial intelligence,” not these paltry machine learning models. In a blog post last week, Altman wrote that he wanted a real artificial general intelligence, or AGI, to be “a great force multiplier for human ingenuity and creativity.” Of course, AGI doesn’t exist outside the realms of science fiction, but even here he talks about a “slow” rollout to avoid too much disruption to society. The company has not taken this tack with its release and popularization of LLMs.

Experts were quick to pull apart Altman’s grandiose designs on AI. University of Washington professor Emily Bender, who specializes in computational linguistics, said that Altman was treating machine learning as if it were “magic.”

In a blog post by Michael Atleson, an attorney in the Federal Trade Commission’s advertising practices division, the government agency seemed to imply that companies should be a little more hesitant to make wondrous claims about the capabilities of AI like large language models.

Atleson wrote that: “If something goes wrong—maybe it fails or yields biased results—you can’t just blame a third-party developer of the technology. And you can’t say you’re not responsible because that technology is a ‘black box’ you can’t understand or didn’t know how to test.”

Even with this API, the technology behind ChatGPT will remain a black box. Knowing tech’s inclination to jump full force onto other controversial technologies like blockchain and crypto, there’s little chance any regulatory threat will stop our new AI overlords.